Have you ever watched a video online and felt that split-second unease—something feels real, but also not quite right? That moment of doubt is often your first encounter with deepfake technology, even if you didn’t know it at the time. In the last few years, deepfakes have quietly crossed from obscure research labs into everyday life: viral videos, political controversies, customer support voice bots, movie de-aging, and even scams targeting ordinary people.

Deepfake technology matters because it sits at a rare intersection of creativity, power, and risk. On one hand, it enables filmmakers to resurrect historical figures, helps brands localize content globally, and allows people who’ve lost their voices to speak again. On the other, it can undermine trust, spread misinformation, and cause real personal harm when misused.

In this guide, you’ll get a grounded, real-world explanation of deepfake technology, without hype or fearmongering. We’ll break down how it works in plain language, where it’s genuinely useful, how professionals create deepfakes step by step, which tools are worth your time, and the common mistakes that turn promising projects into ethical or legal disasters. By the end, you’ll understand not just what deepfake technology is, but how to think about it wisely.

What Is Deepfake Technology? A Clear, Human Explanation

Deepfake technology is a form of synthetic media created using artificial intelligence to generate or alter images, videos, or audio so they appear convincingly real. The word “deepfake” combines deep learning and fake, but that name oversimplifies what’s actually happening under the hood.

Think of it like this: imagine teaching a highly skilled art student to mimic one specific person. You show them hundreds—or thousands—of photos and videos. Over time, they learn how that person smiles, blinks, speaks, tilts their head, and expresses emotion. Deepfake models do the same thing, except they learn from data at machine speed.

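At the technical core are neural networks. Classic face-swap tools typically use autoencoders, while newer systems rely on Generative Adversarial Networks (GANs) and diffusion models. In a GAN, one network (the generator) produces fake content while a second network (the discriminator) critiques it by trying to tell fakes from real examples; this feedback loop constantly pushes the output toward realism. Diffusion models take a different route, learning to build a realistic image out of random noise one denoising step at a time. Together, these advances are why modern deepfake technology looks dramatically better than early versions from just a few years ago.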
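To make the generator-versus-critic idea concrete, here is a minimal sketch of the standard GAN loss functions in plain Python. It assumes the discriminator has already produced, for each image, a probability that the image is real; the function names are illustrative and not taken from any particular library.

```python
import math

def discriminator_loss(real_scores, fake_scores, eps=1e-12):
    """Binary cross-entropy for the critic: it should output ~1 for real
    images and ~0 for generated ones. Scores are probabilities in (0, 1)."""
    real_term = sum(-math.log(max(s, eps)) for s in real_scores) / len(real_scores)
    fake_term = sum(-math.log(max(1.0 - s, eps)) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores, eps=1e-12):
    """Non-saturating generator loss: the generator is rewarded when the
    critic assigns a high 'real' probability to its fakes."""
    return sum(-math.log(max(s, eps)) for s in fake_scores) / len(fake_scores)

# A critic that cannot tell real from fake (0.5 everywhere) scores 2*ln(2).
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 3))  # 1.386
# Fakes the critic easily rejects give the generator a large loss...
print(round(generator_loss([0.05]), 3))  # 2.996
# ...while convincing fakes give it a small one.
print(round(generator_loss([0.95]), 3))  # 0.051
```

During training the two losses are minimized in alternation, so each network's improvement raises the bar for the other. The non-saturating form of the generator loss is a common choice because it gives stronger learning signals early on, when the critic rejects almost everything.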

Deepfakes aren’t limited to faces. Today, the term covers:

- Face swapping in video

- Voice cloning and speech synthesis

- Full-body motion transfer

- Lip-syncing across languages

- Entirely synthetic humans that don’t exist

What makes deepfake technology so powerful—and unsettling—is that it exploits the same signals humans rely on to decide what’s real. When those signals can be artificially reproduced, trust becomes fragile.

How Deepfake Technology Actually Works (Without the Jargon)

Understanding how deepfake technology works doesn’t require a computer science degree. At a high level, it follows a predictable process that mirrors human learning.

First, the system collects data. This might be images of a face from different angles, lighting conditions, and expressions, or voice recordings capturing tone, pacing, and emotion. The quality and diversity of this data directly affect the final result.
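One common way to protect data quality is to screen out blurry frames before training. The sketch below assumes grayscale images stored as lists of pixel rows and uses the variance of the Laplacian, a widely used sharpness heuristic: sharp images have strong edges and a high variance, blurry ones a low variance. The threshold of 100 is an illustrative assumption; real pipelines tune it per dataset and typically use an image library rather than pure Python.

```python
def laplacian_variance(image):
    """Variance of the 3x3 Laplacian response over the image interior.
    `image` is a list of equal-length rows of grayscale values (0-255)."""
    h, w = len(image), len(image[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # 4-neighbour Laplacian kernel: [[0, 1, 0], [1, -4, 1], [0, 1, 0]]
            lap = (image[y - 1][x] + image[y + 1][x]
                   + image[y][x - 1] + image[y][x + 1]
                   - 4 * image[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

def is_sharp_enough(image, threshold=100.0):
    # Hypothetical cutoff: frames below it are treated as too blurry to train on.
    return laplacian_variance(image) >= threshold

flat = [[128] * 8 for _ in range(8)]  # no edges at all
checker = [[255 if (x + y) % 2 else 0 for x in range(8)] for y in range(8)]  # strong edges

print(is_sharp_enough(flat))     # False
print(is_sharp_enough(checker))  # True
```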

Next comes training. The AI analyzes patterns—how facial muscles move, how light reflects off skin, how a voice rises at the end of a question. Over time, the model builds a mathematical representation of that person.

Then comes synthesis. When you feed the system new input—a different face, a new script, or another video—it applies what it learned to generate realistic output that matches the target identity.
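Many classic face-swap tools implement training and synthesis with an autoencoder: a shared encoder learns identity-independent features such as pose and expression, and a separate decoder is trained for each person. Swapping means encoding a frame of person A and decoding it with person B's decoder. The sketch below is a deliberately tiny, linear stand-in for that idea, not a neural network: each "face" is a short feature vector whose split into identity and expression parts is hard-coded (an assumption; real models learn it), and "training" a decoder just means learning a person's average identity features.

```python
from statistics import mean

# Toy assumption: the first IDENTITY_DIMS entries of a face vector describe
# who the person is; the remaining entries describe pose/expression.
IDENTITY_DIMS = 2

def encode(face):
    """Shared encoder: keeps only the identity-independent part (pose/expression)."""
    return face[IDENTITY_DIMS:]

def train_decoder(training_faces):
    """'Training' a per-person decoder: learn that person's average identity features."""
    identity = [mean(f[i] for f in training_faces) for i in range(IDENTITY_DIMS)]

    def decode(code):
        # Re-attach the learned identity to whatever pose/expression comes in.
        return identity + list(code)

    return decode

# Hypothetical training data for person B: [identity..., pose/expression...]
faces_b = [[5.0, 7.0, 0.9, 0.2], [5.0, 6.0, 0.4, 0.8]]
decode_b = train_decoder(faces_b)

new_frame_of_a = [1.1, 2.1, 0.7, 0.9]        # person A, new pose/expression
swapped = decode_b(encode(new_frame_of_a))   # B's identity, A's pose/expression
print(swapped)  # [5.0, 6.5, 0.7, 0.9]
```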

Finally, post-processing smooths imperfections. Color correction, frame blending, and audio cleanup make the result feel seamless to human viewers.
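Color correction and blending are the most mechanical of these steps. As a minimal sketch, assuming a single grayscale channel stored as a flat list of pixel values, the code below rescales the generated face so its brightness mean and standard deviation match the region it replaces (a simplified, single-channel version of statistical color transfer), then alpha-blends it in with a mask that fades toward the edges. The function names and mask values are illustrative.

```python
from statistics import mean, pstdev

def match_color(generated, reference):
    """Rescale `generated` so its mean and standard deviation match `reference`."""
    g_mean, g_std = mean(generated), pstdev(generated)
    r_mean, r_std = mean(reference), pstdev(reference)
    scale = r_std / g_std if g_std > 0 else 1.0
    return [(p - g_mean) * scale + r_mean for p in generated]

def alpha_blend(generated, original, mask):
    """Per-pixel blend: mask=1 keeps the generated pixel, mask=0 keeps the original."""
    return [a * g + (1 - a) * o for g, o, a in zip(generated, original, mask)]

generated_face = [200, 260, 240, 220]  # too bright and too contrasty for the scene
original_patch = [100, 110, 120, 130]  # pixels in the frame being replaced
feather_mask = [0.0, 0.5, 1.0, 1.0]    # soft edge on the left side

corrected = match_color(generated_face, original_patch)
print([round(p, 1) for p in corrected])  # [100.0, 130.0, 120.0, 110.0]
blended = alpha_blend(corrected, original_patch, feather_mask)
print([round(p, 1) for p in blended])    # [100.0, 120.0, 120.0, 110.0]
```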

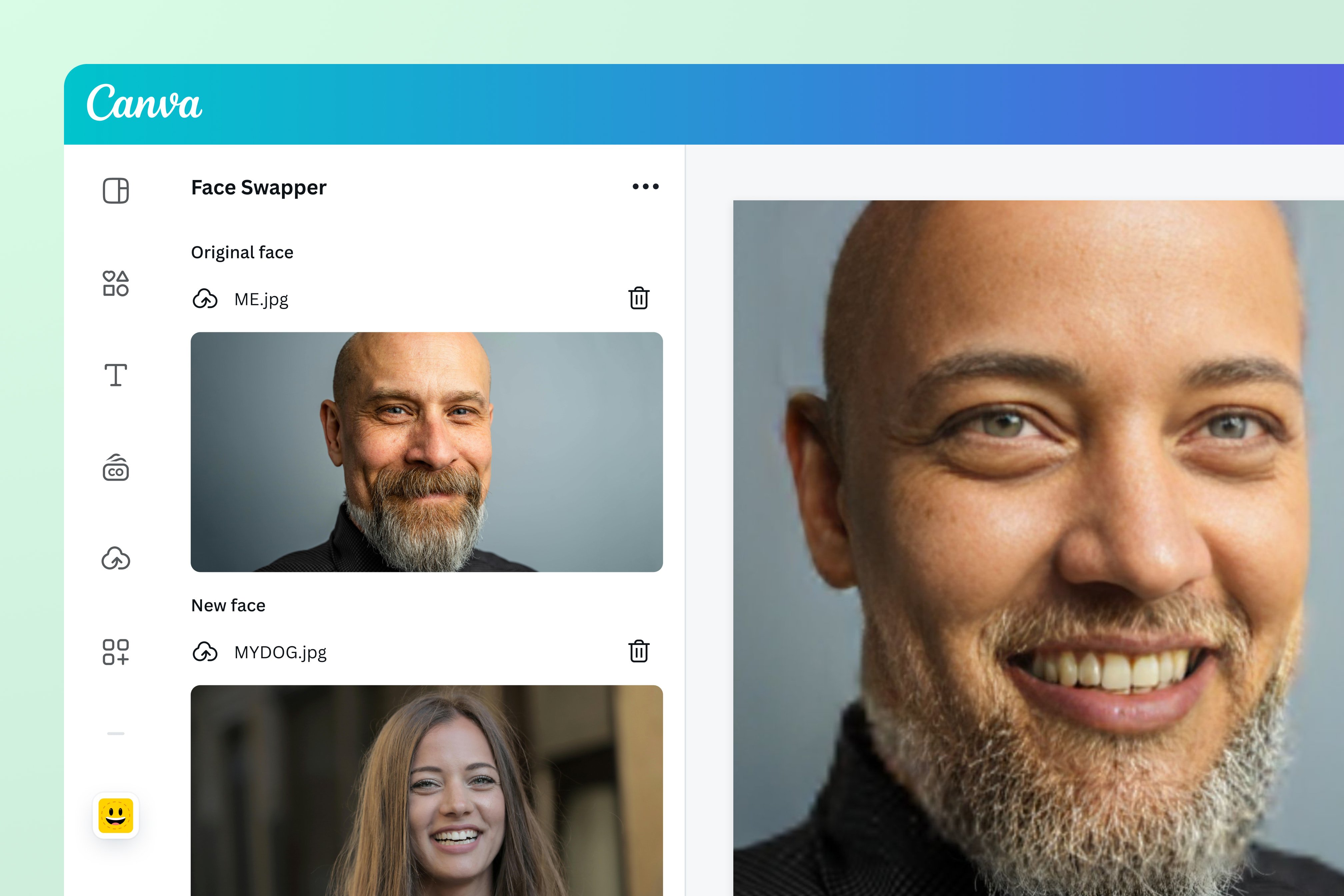

This pipeline explains why high-quality, responsible deepfake creation takes time and expertise. The “one-click” apps you see online usually trade quality and control for convenience, often with questionable data practices behind the scenes.

Benefits and Real-World Use Cases of Deepfake Technology

Despite the controversy, deepfake technology delivers real value when used responsibly. Many industries already rely on it quietly, without calling it a “deepfake.”

In entertainment, filmmakers use deepfake-style techniques to de-age actors, dub performances into multiple languages while preserving lip movement, or complete scenes when actors are unavailable. Studios are increasingly blending AI-generated facial performance with traditional visual effects, and creative-software vendors such as Adobe are building generative AI features into mainstream tools.

In education and training, deepfake technology enables personalized instructors. Imagine a medical student practicing bedside communication with a realistic virtual patient who reacts emotionally and verbally in real time. That’s not science fiction—it’s already in pilot programs.

Marketing teams use synthetic spokespeople to localize content across languages without reshooting videos. Customer service departments deploy voice clones that sound natural, empathetic, and consistent, improving user experience without replacing human oversight.

Accessibility may be the most powerful application. People who’ve lost their ability to speak due to illness can use voice cloning trained on old recordings to communicate in their own voice again. In these moments, deepfake technology feels less like deception and more like restoration.

Step-by-Step: How Deepfake Technology Is Created in Practice

Creating a responsible deepfake isn’t magic—it’s a disciplined process. Professionals follow structured steps to maintain quality and ethics.

Step one is consent and purpose definition. Before collecting data, ethical creators document permission and clarify how the content will be used. This step alone separates legitimate projects from exploitative ones.
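Documenting consent can be as simple as a structured record that the pipeline checks before any data is processed. The sketch below is hypothetical: the field names and the rule that consent must cover the exact intended use before an expiry date are assumptions for illustration, not a legal standard.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class ConsentRecord:
    subject: str              # person whose likeness or voice is used
    approved_uses: frozenset  # e.g. {"dubbing", "training_video"}
    expires: date             # consent is time-limited in this sketch
    signed: bool              # a signed agreement is on file

def may_proceed(record: ConsentRecord, intended_use: str, today: date) -> bool:
    """Allow the project only if consent is signed, unexpired, and covers this use."""
    return record.signed and today <= record.expires and intended_use in record.approved_uses

consent = ConsentRecord(
    subject="Jane Doe",
    approved_uses=frozenset({"dubbing"}),
    expires=date(2030, 1, 1),
    signed=True,
)
print(may_proceed(consent, "dubbing", date(2025, 6, 1)))      # True
print(may_proceed(consent, "advertising", date(2025, 6, 1)))  # False: use not covered
```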

Step two is data collection. High-resolution images or clean audio samples dramatically improve results. Consistent quality (sharp focus, even lighting, clean recording conditions) matters more than sheer volume.

Step three is model selection and training. Depending on the task—face swapping, voice synthesis, or full avatar creation—different architectures are chosen. Training can take hours or days, depending on complexity.

Step four is generation and testing. Output is reviewed for artifacts, unnatural expressions, or audio glitches. This is where human judgment matters most.
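Automated checks can narrow down where reviewers should look, though they do not replace human judgment. One simple heuristic, sketched below under the assumption that each frame is a flat list of grayscale pixel values, flags frames whose average change from the previous frame is far above the clip's typical change, a common symptom of flicker in generated video. The factor of 3 is an arbitrary assumption.

```python
from statistics import median

def mean_abs_diff(a, b):
    """Average absolute pixel difference between two frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def flag_flicker(frames, factor=3.0):
    """Return indices of frames that differ from the previous frame by more than
    `factor` times the median frame-to-frame difference."""
    diffs = [mean_abs_diff(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    typical = median(diffs)
    return [i for i, d in enumerate(diffs, start=1) if d > factor * typical]

# Hypothetical 4-pixel frames: smooth motion with a sudden glitch at frame 3.
clip = [
    [10, 10, 10, 10],
    [11, 11, 11, 11],
    [12, 12, 12, 12],
    [40, 40, 40, 40],  # glitch
    [13, 13, 13, 13],
    [14, 14, 14, 14],
]
print(flag_flicker(clip))  # [3, 4]
```

Frame 4 is flagged as well because the jump back from the glitch is just as abrupt; a reviewer would then inspect that short stretch rather than the whole clip.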

Step five is disclosure and deployment. Responsible use includes clear labeling or contextual transparency, especially in public-facing content.
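Disclosure can also travel with the file itself. Industry efforts such as the C2PA Content Credentials standard define signed provenance manifests; the sketch below is a much simpler, hypothetical stand-in that produces a JSON "sidecar" label declaring the media synthetic and ties it to the exact file via a SHA-256 hash. The field names are assumptions, and unlike C2PA this label is not cryptographically signed.

```python
import hashlib
import json

def make_disclosure_label(media_bytes: bytes, tool: str, consent_ref: str) -> str:
    """Build a JSON label declaring the media as AI-generated.
    The SHA-256 digest ties the label to this exact file content."""
    label = {
        "synthetic_media": True,
        "generator": tool,                 # hypothetical field names
        "consent_reference": consent_ref,
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
    }
    return json.dumps(label, indent=2, sort_keys=True)

def label_matches(media_bytes: bytes, label_json: str) -> bool:
    """Check that a label belongs to this file (any edit changes the hash)."""
    return json.loads(label_json)["sha256"] == hashlib.sha256(media_bytes).hexdigest()

video = b"...rendered video bytes..."
label = make_disclosure_label(video, tool="in-house face swap v2", consent_ref="CONSENT-0042")
print(label_matches(video, label))            # True
print(label_matches(video + b"edit", label))  # False
```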

Skipping any of these steps usually leads to poor quality—or ethical backlash.

Tools, Platforms, and Honest Comparisons

Deepfake technology tools range from consumer-friendly apps to professional-grade platforms. Choosing the right one depends on your goals and risk tolerance.

Free tools often prioritize ease of use. They’re fine for experimentation but limited in control and output quality. Many also retain user data, which raises privacy concerns.

Paid platforms offer better results, customization, and support. They’re commonly used in film, advertising, and enterprise settings. Some integrate with broader AI ecosystems developed by companies like Google and OpenAI, although deepfake-specific tools often operate independently.

When comparing tools, evaluate:

- Data ownership policies

- Output realism

- Ethical safeguards

- Export rights

- Support and documentation
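One way to turn this checklist into a decision is a weighted scoring matrix. The weights and 1-5 ratings below are made-up placeholders for two hypothetical tools, not assessments of real products; the point is that privacy and safeguard criteria can deliberately outweigh raw realism.

```python
# Hypothetical weights (they sum to 1.0); adjust to your own priorities.
CRITERIA_WEIGHTS = {
    "data_ownership": 0.30,
    "ethical_safeguards": 0.25,
    "output_realism": 0.20,
    "export_rights": 0.15,
    "support_docs": 0.10,
}

def weighted_score(scores: dict) -> float:
    """Combine 1-5 ratings per criterion into one weighted score."""
    missing = CRITERIA_WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(CRITERIA_WEIGHTS[c] * scores[c] for c in CRITERIA_WEIGHTS)

tool_a = {"data_ownership": 2, "ethical_safeguards": 2, "output_realism": 5,
          "export_rights": 3, "support_docs": 4}
tool_b = {"data_ownership": 5, "ethical_safeguards": 4, "output_realism": 4,
          "export_rights": 4, "support_docs": 3}

for name, scores in [("Tool A", tool_a), ("Tool B", tool_b)]:
    print(name, round(weighted_score(scores), 2))
```

Here the more realistic Tool A still loses (2.95 vs 4.2) because it rates poorly on data ownership and safeguards.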

Professionals don’t chase the newest tool—they choose the one that aligns with their responsibility to users and audiences.

Common Mistakes People Make with Deepfake Technology (And How to Fix Them)

The most common mistake is underestimating the ethical dimension. Just because you can generate something doesn’t mean you should. Always start with consent and disclosure.

Another frequent error is poor data quality. Low-resolution images or noisy audio lead to uncanny results. Invest time upfront in clean inputs.

Many beginners also over-automate. Deepfake technology still needs human oversight. Automated outputs without review often contain subtle errors that destroy credibility.

Finally, people forget the legal landscape. Laws around synthetic media are evolving fast. Misuse can carry serious consequences, even if intentions weren’t malicious.

Fixing these mistakes requires patience, transparency, and respect for the technology’s impact.

The Future of Deepfake Technology: Where We’re Headed

Deepfake technology is becoming more realistic and more accessible while also attracting more regulation. Detection tools are improving, but so are generation models, creating a constant arms race.

We’re likely to see clearer labeling standards, platform-level disclosures, and stronger digital identity verification. Social platforms such as TikTok are already experimenting with synthetic-media labels.

At the same time, positive use cases will expand. Virtual tutors, digital historians, and personalized entertainment will become normal. The technology itself isn’t the villain—how we choose to use it will define its legacy.

Conclusion

Deepfake technology isn’t a passing trend. It’s a foundational shift in how digital reality is created and perceived. When used responsibly, it unlocks creativity, accessibility, and efficiency we’ve never had before. When abused, it erodes trust and causes harm.

The difference lies in intent, transparency, and education. By understanding how deepfake technology works—and respecting its power—you’re better equipped to use it wisely, spot misuse, and contribute to a healthier digital ecosystem.

If this guide helped clarify the topic, consider sharing it, exploring ethical tools, or joining conversations about responsible AI. Awareness is the first layer of defense.

FAQs

What is deepfake technology used for?

It’s used in entertainment, marketing, accessibility tools, education, and customer service, alongside less ethical uses like scams and misinformation.

Is deepfake technology legal?

In most places the technology itself is legal, but misuse, especially without consent, can violate privacy, fraud, or defamation laws.

Can deepfakes be detected?

Detection tools exist, but no method is foolproof. Human skepticism and context remain essential.

Are deepfakes only videos?

No. Audio-only deepfakes, especially voice cloning, are increasingly common.

Will deepfake technology keep improving?

Yes. Quality, speed, and accessibility improve every year, which is why ethical frameworks matter.