Big data technology is one of those terms that sounds abstract until you realize it’s already shaping the choices you make every single day—what you watch, what you buy, how long your delivery takes, even which route your ride-hailing app chooses. In the first few years of my career, I watched teams drown in spreadsheets while executives begged for “better insights.” Fast forward to today, and organizations that truly understand big data technology aren’t just surviving—they’re quietly outpacing everyone else.

This guide is written for real humans, not buzzword collectors. If you’re a founder, marketer, analyst, engineer, student, or decision-maker trying to understand what big data technology actually is, how it works, and how to use it responsibly and profitably, you’re in the right place. We’ll break down concepts with plain-language analogies, share real-world use cases, walk through a step-by-step implementation path, compare tools honestly, and call out the mistakes I’ve personally seen derail promising projects.

By the end, you’ll know when big data technology is worth the investment, how to approach it without overengineering, and how to turn raw data into decisions that matter.

What Is Big Data Technology (And Why It’s Different From “Regular” Data)?

Big data technology refers to the systems, tools, and architectural approaches used to collect, store, process, analyze, and act on extremely large, fast-moving, and diverse datasets that traditional databases simply can’t handle.

A helpful analogy:

Traditional data systems are like filing cabinets. Big data technology is an automated logistics warehouse—robots, conveyors, sensors, and analytics all working together in real time.

The Five Vs That Actually Matter in Practice

Most introductions stop at buzzwords, but these characteristics genuinely shape technical and business decisions:

Volume

We’re talking terabytes to petabytes of data—from clicks, sensors, transactions, logs, videos, and more. The challenge isn’t just storage; it’s finding value without collapsing under cost.

Velocity

Data often arrives continuously: live user activity, financial trades, IoT sensor streams. Big data technology is designed to ingest and process data as it flows, not just overnight.

Variety

Structured tables are only part of the picture now. Modern data includes text, images, video, JSON events, audio, and machine logs. Big data platforms are built to handle this messiness.

Veracity

Not all data is clean, accurate, or complete. A core job of big data technology is filtering noise, handling missing values, and managing uncertainty.
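To make that concrete, here is a minimal sketch of the simplest kind of veracity handling: dropping missing values and filtering out readings that can’t be real. The sample readings and the plausible range are assumptions made up for illustration, not values from any real system.

```python
from statistics import median

# Hypothetical temperature readings; None marks a missing value.
readings = [21.5, 22.0, None, 21.8, 450.0, 22.1, None, 21.9]

def clean(values, low, high):
    """Keep only values that are present and inside the range [low, high]."""
    return [v for v in values if v is not None and low <= v <= high]

# Assumed plausible range for this sensor, chosen for the example.
valid = clean(readings, low=-40.0, high=60.0)

# Summary figures computed on the cleaned data only.
typical = median(valid)
rejected = len(readings) - len(valid)
```

Here two missing values and one implausible spike are rejected, so the median is computed from the five readings that remain. Real pipelines apply the same idea with richer rules, but the principle is the same: decide what counts as trustworthy before you analyze.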

Value

This is the most important V. If data doesn’t lead to better decisions, automation, or insight, it’s just expensive clutter.

Why Traditional Databases Fall Short

Relational databases are fantastic for predictable, structured workloads. They struggle when:

- Data volume grows faster than a single server can be upgraded

- Schema changes frequently

- Real-time processing is required

- Costs explode with scale

Big data technology emerged to solve these exact pain points through distributed systems, horizontal scaling, and fault tolerance.

Big Data Technology in the Real World: Benefits and Use Cases That Actually Pay Off

When done right, big data technology doesn’t just improve reports—it changes how organizations think and act.

Business Benefits You Can Measure

Faster, smarter decisions

Instead of waiting weeks for reports, teams can respond to trends as they happen.

Operational efficiency

Predictive maintenance, demand forecasting, and workflow optimization reduce waste and downtime.

Personalization at scale

From product recommendations to targeted messaging, big data enables relevance without manual effort.

Risk reduction

Fraud detection, anomaly spotting, and compliance monitoring become proactive instead of reactive.

Innovation leverage

New products, pricing models, and services emerge once data becomes accessible and trustworthy.

Industry-Specific Use Cases

Retail & E-commerce

- Real-time recommendation engines

- Dynamic pricing based on demand

- Inventory optimization across locations

Healthcare

- Predictive patient risk models

- Population health analytics

- Medical imaging analysis

Finance

- Fraud detection in milliseconds

- Credit scoring using alternative data

- Market sentiment analysis

Manufacturing & IoT

- Predictive equipment maintenance

- Quality control through sensor data

- Supply chain optimization

Marketing & Media

- Audience segmentation at scale

- Content performance analytics

- Attribution modeling across channels

The common thread: big data technology turns complexity into competitive advantage.

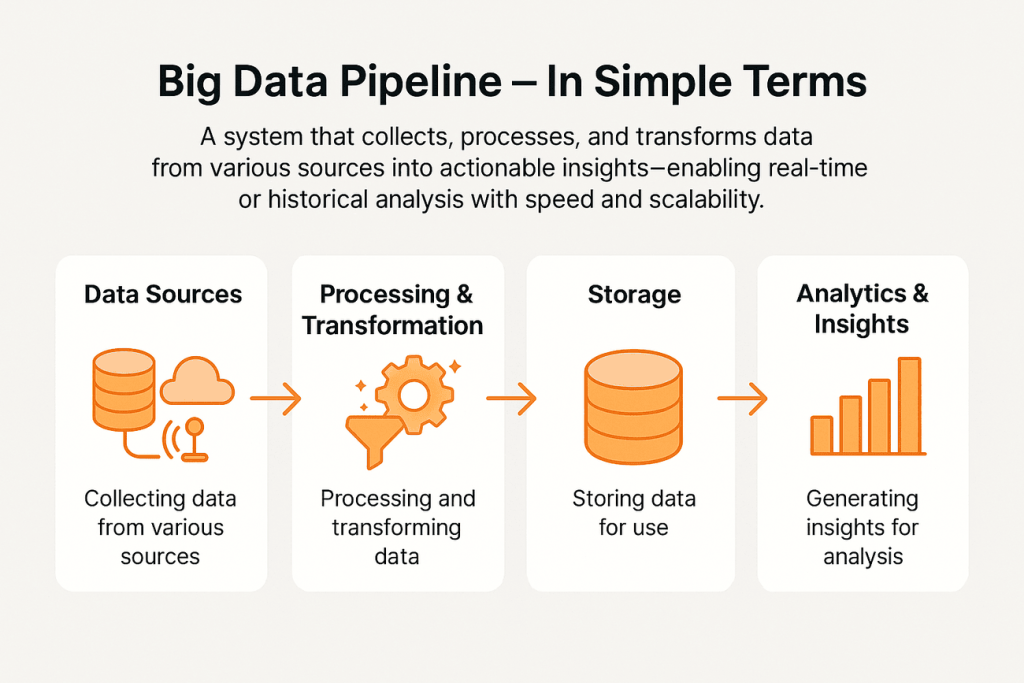

How Big Data Technology Works: A Clear, Step-by-Step View

Understanding the lifecycle removes most of the intimidation.

Step 1: Data Ingestion

This is how data enters your system. Sources include:

- Web and app events

- Transactional databases

- IoT devices and sensors

- APIs and third-party platforms

Ingestion tools typically support both batch loads and real-time streams, and they buffer incoming events so you don’t lose data when traffic spikes.
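A rough sketch of the buffering idea, in plain Python: events are accepted as fast as they arrive and handed downstream in fixed-size batches. The `IngestBuffer` class and the event fields are hypothetical names invented for this example, not the API of any real ingestion tool.

```python
from collections import deque

class IngestBuffer:
    """Collects incoming events and hands them downstream in batches."""

    def __init__(self, batch_size):
        self.batch_size = batch_size
        self._pending = deque()

    def add(self, event):
        """Accept one event immediately; processing happens later."""
        self._pending.append(event)

    def drain(self):
        """Yield batches of up to batch_size events until the buffer is empty."""
        while self._pending:
            size = min(self.batch_size, len(self._pending))
            yield [self._pending.popleft() for _ in range(size)]

buffer = IngestBuffer(batch_size=3)
for i in range(7):  # a burst of seven events arriving at once
    buffer.add({"event_id": i, "type": "page_view"})

batches = list(buffer.drain())
batch_sizes = [len(batch) for batch in batches]
```

The burst of seven events leaves the buffer as two full batches and one partial batch, in arrival order. Production systems add durability and backpressure on top, but decoupling arrival from processing is the core idea.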

Step 2: Distributed Storage

Instead of one big server, data is spread across many machines. This enables:

- Horizontal scaling

- Fault tolerance

- Cost efficiency with commodity hardware

Popular approaches include distributed file systems and cloud object storage.
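One way to picture how data gets spread across machines is hash-based placement with replication. The sketch below is a simplification under stated assumptions: the node names, the replica count, and the placement rule are all made up for illustration, and real systems use more sophisticated schemes.

```python
import hashlib

NODES = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical machine names
REPLICAS = 2  # assumed number of copies kept per key

def placement(key, nodes=NODES, replicas=REPLICAS):
    """Return the nodes that should each hold a copy of `key`."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    start = int(digest, 16) % len(nodes)
    # Copies go on consecutive nodes, wrapping around the end of the list.
    return [nodes[(start + i) % len(nodes)] for i in range(replicas)]

def readable_from(key, failed):
    """Nodes that can still serve `key` when the nodes in `failed` are down."""
    return [node for node in placement(key) if node not in failed]
```

Because every key lives on two different nodes, losing any single machine still leaves one readable copy. That is fault tolerance in miniature, and adding more entries to `NODES` is the horizontal-scaling half of the story.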

Step 3: Data Processing

This is where raw data becomes usable. Processing can be:

- Batch (large jobs run periodically)

- Stream-based (continuous, near-real-time)

Transformations include cleaning, joining, aggregating, and enriching data.
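Those four transformations are easier to grasp on a tiny batch. This sketch uses plain Python and invented sample records (the `orders` and `customers` data are assumptions); at real scale a distributed engine would run the same steps across many machines.

```python
from collections import defaultdict

# Hypothetical raw inputs. Amounts are integer cents to avoid rounding issues.
orders = [
    {"order_id": 1, "customer_id": "c1", "amount_cents": 1999},
    {"order_id": 2, "customer_id": "c2", "amount_cents": 500},
    {"order_id": 3, "customer_id": "c1", "amount_cents": None},  # dirty row
    {"order_id": 4, "customer_id": "c1", "amount_cents": 1001},
]
customers = {"c1": {"region": "EU"}, "c2": {"region": "US"}}

# Clean: drop rows with a missing amount.
cleaned = [o for o in orders if o["amount_cents"] is not None]

# Join and enrich: attach each customer's region to their orders.
enriched = [
    {**o, "region": customers[o["customer_id"]]["region"]} for o in cleaned
]

# Aggregate: total revenue per region.
revenue_by_region = defaultdict(int)
for row in enriched:
    revenue_by_region[row["region"]] += row["amount_cents"]
```

Run as a batch job, this would execute once over a day’s orders. In a streaming setup, the same logic would be applied to each event as it arrives, with the running totals kept up to date.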

Step 4: Analytics and Modeling

Once processed, data is analyzed using:

- SQL-like queries

- Statistical analysis

- Machine learning models

- Visualization tools

This step is where insight is extracted.
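For a feel of the SQL side, here is a small sketch using Python’s built-in `sqlite3` module as a stand-in for a real analytics engine. The `events` table and its rows are invented for the example; the query shape (filter, group, aggregate, sort) is what carries over to larger platforms.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.execute(
    "CREATE TABLE events (user_id TEXT, action TEXT, amount_cents INTEGER)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("u1", "purchase", 1200),
        ("u2", "view", 0),
        ("u1", "purchase", 800),
        ("u3", "purchase", 1500),
        ("u2", "purchase", 300),
    ],
)

# Who are the top spenders, and how many purchases did each make?
rows = conn.execute(
    """
    SELECT user_id, COUNT(*) AS purchases, SUM(amount_cents) AS total_cents
    FROM events
    WHERE action = 'purchase'
    GROUP BY user_id
    ORDER BY total_cents DESC
    """
).fetchall()
conn.close()
```

Each row in `rows` is a `(user_id, purchases, total_cents)` tuple, sorted with the biggest spender first.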

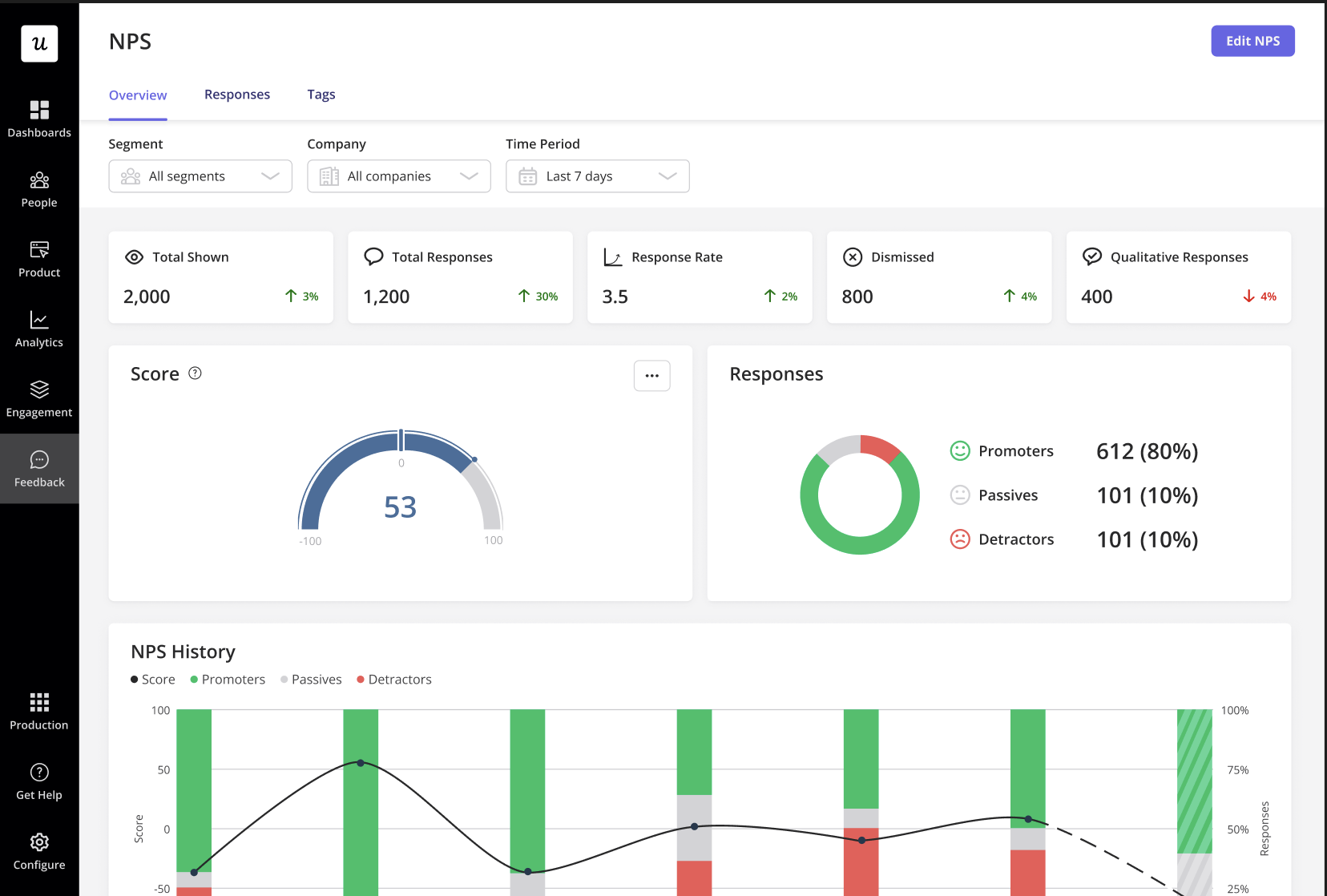

Step 5: Action and Integration

The final step is often ignored—and that’s a mistake. Insights must feed back into:

- Dashboards

- Automated systems

- Business workflows

- Product features

If insights don’t change behavior, the system has failed.

Core Components of a Modern Big Data Technology Stack

Data Lakes vs Data Warehouses

Data Lakes

Store raw data in its native format. Flexible, scalable, and ideal for exploration.

Data Warehouses

Store structured, cleaned data optimized for analytics and reporting.

Most mature systems use both—lakes for ingestion and experimentation, warehouses for trusted reporting.
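The practical difference shows up at write time. In this simplified sketch, the lake accepts every raw record as-is, while the warehouse only loads records that match a schema. The schema, the sample records, and the function names are assumptions for illustration only.

```python
import json

# Assumed warehouse schema: field name -> required Python type.
WAREHOUSE_SCHEMA = {"user_id": str, "amount_cents": int}

lake = []       # raw records, stored exactly as received
warehouse = []  # validated rows with only the schema's fields

def land_in_lake(raw_line):
    """Store the raw record untouched; interpretation happens at read time."""
    lake.append(raw_line)

def load_to_warehouse(raw_line):
    """Parse a record and load it only if every schema field has the right type."""
    record = json.loads(raw_line)
    for field, expected_type in WAREHOUSE_SCHEMA.items():
        if not isinstance(record.get(field), expected_type):
            return False
    warehouse.append({field: record[field] for field in WAREHOUSE_SCHEMA})
    return True

raw_lines = [
    '{"user_id": "u1", "amount_cents": 1200, "device": "ios"}',
    '{"user_id": "u2", "amount_cents": "n/a"}',
    '{"user_id": "u3", "amount_cents": 450}',
]
for line in raw_lines:
    land_in_lake(line)
    load_to_warehouse(line)
```

All three records land in the lake, including the malformed one and the extra `device` field, so nothing is lost for later exploration. Only the two well-formed records reach the warehouse, which is why reports built on it can be trusted.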

Distributed Computing Engines

Frameworks like Apache Hadoop and Apache Spark allow massive datasets to be processed in parallel across a cluster of machines. Spark, in particular, is favored for keeping intermediate data in memory, which speeds up iterative jobs, and for its built-in machine learning library.
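The pattern underneath these engines is split the data, process each piece independently, then merge the partial results. The sketch below imitates that with Python’s standard library on one machine. It is not the Hadoop or Spark API, and the sample log lines are invented; threads here simply stand in for cluster workers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

# Hypothetical log lines, already split into three partitions.
partitions = [
    ["error timeout", "ok"],
    ["ok ok", "error disk"],
    ["ok"],
]

def count_words(lines):
    """Map step: count words within a single partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts

def merge(left, right):
    """Reduce step: combine two partial counts into one."""
    return left + right

# Each partition is processed independently of the others.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(count_words, partitions))

totals = reduce(merge, partials, Counter())
```

Because no partition depends on another, the map step can run on as many workers as you have. Only the small partial counts need to be brought together at the end, which is what makes the approach scale.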

Streaming Platforms

Tools such as Apache Kafka handle real-time data flows reliably and at scale.
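A useful mental model for this kind of platform is an append-only log that several consumers read at their own pace. The toy class below illustrates that model only. It is not Kafka’s API, and the class, method, and consumer names are made up for the example.

```python
class EventLog:
    """Append-only log where each consumer tracks its own read position."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # consumer name -> index of the next record to read

    def append(self, record):
        """Add a record to the end of the log and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, consumer, max_records=10):
        """Return up to max_records unread records and advance the offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._records[start:start + max_records]
        self._offsets[consumer] = start + len(batch)
        return batch

log = EventLog()
for name in ["signup", "purchase", "refund"]:
    log.append(name)

billing_first = log.poll("billing", max_records=2)
analytics_all = log.poll("analytics")
billing_second = log.poll("billing")
```

The `billing` consumer reads two records, then picks up the third on its next poll, while `analytics` reads all three independently. Records are never removed by reading, so a slow consumer doesn’t hold anyone else back.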

Cloud Platforms

Cloud providers abstract away much of the infrastructure complexity, offering managed big data services that scale on demand.

A Practical Step-by-Step Guide to Implementing Big Data Technology

This is the part most articles skip. Here’s the real-world approach that actually works.

Step 1: Start With a Business Question

Never start with tools. Start with questions like:

- What decision do we struggle to make?

- Where are we guessing instead of knowing?

- Which process costs the most time or money?

Step 2: Audit Your Existing Data

Most organizations already have more data than they realize. Identify:

- Data sources

- Data owners

- Data quality issues

- Access limitations

Step 3: Choose a Scalable Architecture

Design for growth, not perfection. Cloud-based, modular architectures allow you to evolve without rewriting everything.

Step 4: Build a Minimum Viable Pipeline

Ingest → store → process → visualize. Keep it simple. Prove value before expanding.

Step 5: Invest in Data Quality Early

Bad data scales just as fast as good data. Validation, monitoring, and documentation save months later.
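Validation doesn’t need to be elaborate to be useful. Here is a minimal sketch of rule-based checks that count failures per rule, which is the kind of number you would then monitor over time. The rule names, fields, and sample records are assumptions chosen for illustration.

```python
# Each rule maps a human-readable name to a check that returns True on success.
RULES = {
    "order_id is present": lambda r: r.get("order_id") is not None,
    "amount_cents is a non-negative integer": lambda r: (
        isinstance(r.get("amount_cents"), int) and r["amount_cents"] >= 0
    ),
    "country is a 2-letter code": lambda r: (
        isinstance(r.get("country"), str) and len(r["country"]) == 2
    ),
}

def validate(records):
    """Return the number of records that fail each rule."""
    failures = {name: 0 for name in RULES}
    for record in records:
        for name, check in RULES.items():
            if not check(record):
                failures[name] += 1
    return failures

# Hypothetical batch with one problem per bad record.
batch = [
    {"order_id": 1, "amount_cents": 500, "country": "DE"},
    {"order_id": None, "amount_cents": 250, "country": "FR"},
    {"order_id": 3, "amount_cents": -20, "country": "US"},
    {"order_id": 4, "amount_cents": 100, "country": "Germany"},
]
report = validate(batch)
```

Keeping rule names readable matters more than it looks: the report doubles as documentation of what “good data” means for this dataset.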

Step 6: Enable Self-Service Analytics

Empower teams to explore data without bottlenecks. This multiplies ROI.

Step 7: Iterate Relentlessly

Big data technology is not a one-time project. It’s a living system.

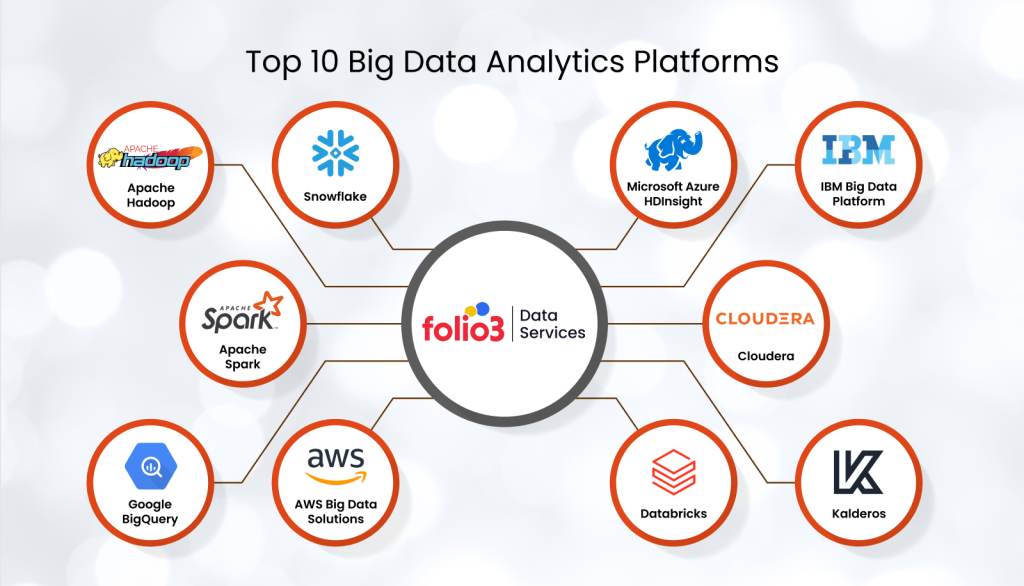

Tools, Platforms, and Honest Comparisons

Open-Source Tools

Pros:

- Cost-effective

- Highly customizable

- Large community support

Cons:

- Requires skilled teams

- Maintenance overhead

Examples:

- Apache Hadoop

- Apache Spark

- Apache Kafka

Cloud-Managed Solutions

Pros:

- Faster setup

- Automatic scaling

- Reduced operational burden

Cons:

- Ongoing costs

- Vendor lock-in risks

Examples:

- AWS analytics services (such as Amazon EMR and Amazon Redshift)

- Google BigQuery

- Azure Synapse Analytics

Visualization and BI Tools

These bridge the gap between data and decisions. Choose tools your teams will actually use.

Common Big Data Technology Mistakes (And How to Fix Them)

Mistake: Collecting everything “just in case”

Fix: Tie every dataset to a business purpose.

Mistake: Overengineering early

Fix: Start small, prove value, then scale.

Mistake: Ignoring data governance

Fix: Define ownership, access controls, and documentation from day one.

Mistake: Treating big data as purely technical

Fix: Involve business stakeholders continuously.

Mistake: Underestimating costs

Fix: Monitor usage, storage, and compute aggressively.
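On that last fix, even a back-of-the-envelope check beats a surprise invoice. The sketch below estimates a monthly bill and compares it to a budget. Every rate and quantity here is a placeholder made up for the example, not real provider pricing.

```python
# Placeholder rates in integer cents. These are NOT real cloud prices.
STORAGE_CENTS_PER_GB_MONTH = 2
COMPUTE_CENTS_PER_HOUR = 50
MONTHLY_BUDGET_CENTS = 100_000

def monthly_cost_cents(storage_gb, compute_hours):
    """Estimate one month's bill from storage volume and compute time."""
    storage = storage_gb * STORAGE_CENTS_PER_GB_MONTH
    compute = compute_hours * COMPUTE_CENTS_PER_HOUR
    return storage + compute

# Hypothetical usage for one month.
cost = monthly_cost_cents(storage_gb=20_000, compute_hours=1_500)
over_budget = cost > MONTHLY_BUDGET_CENTS
overrun_cents = max(0, cost - MONTHLY_BUDGET_CENTS)
```

With these made-up numbers, compute accounts for more of the bill than storage, and the total lands above the budget. Swap in your own rates and usage, and run the check on a schedule so overruns show up while they are still small.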

Big Data Technology and AI: Where the Real Power Lives

Big data technology and AI reinforce each other. Large datasets fuel better models, and AI extracts deeper insight from complex data.

The Future of Big Data Technology

Expect trends like:

- Real-time analytics becoming standard

- Increased automation of data pipelines

- Stronger focus on privacy and ethics

- Deeper integration with AI systems

The winners won’t be those with the most data—but those who use it wisely.

Conclusion: Turning Big Data Technology Into Real Advantage

Big data technology isn’t about hoarding information or chasing trends. It’s about clarity—seeing patterns others miss and acting with confidence. The organizations that succeed are rarely the loudest. They’re the ones quietly building systems that learn, adapt, and improve every day.

If you take one thing away from this guide, let it be this: start with people and problems, then let technology serve them—not the other way around.

If you have questions, experiences, or lessons learned with big data technology, share them. Real insight grows through conversation.

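FAQs

Is big data technology only for large enterprises?

No. Cloud platforms make it accessible to startups and small teams when used strategically.

What’s the difference between big data and data analytics?

Big data focuses on infrastructure and scale. Analytics focuses on extracting insight.

Do I need technical skills to work with big data technology?

Technical skills help, but modern tools increasingly support low-code and no-code access.

Is big data technology expensive?

It can be, but careful architecture, cloud cost controls, and clear goals keep it manageable.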